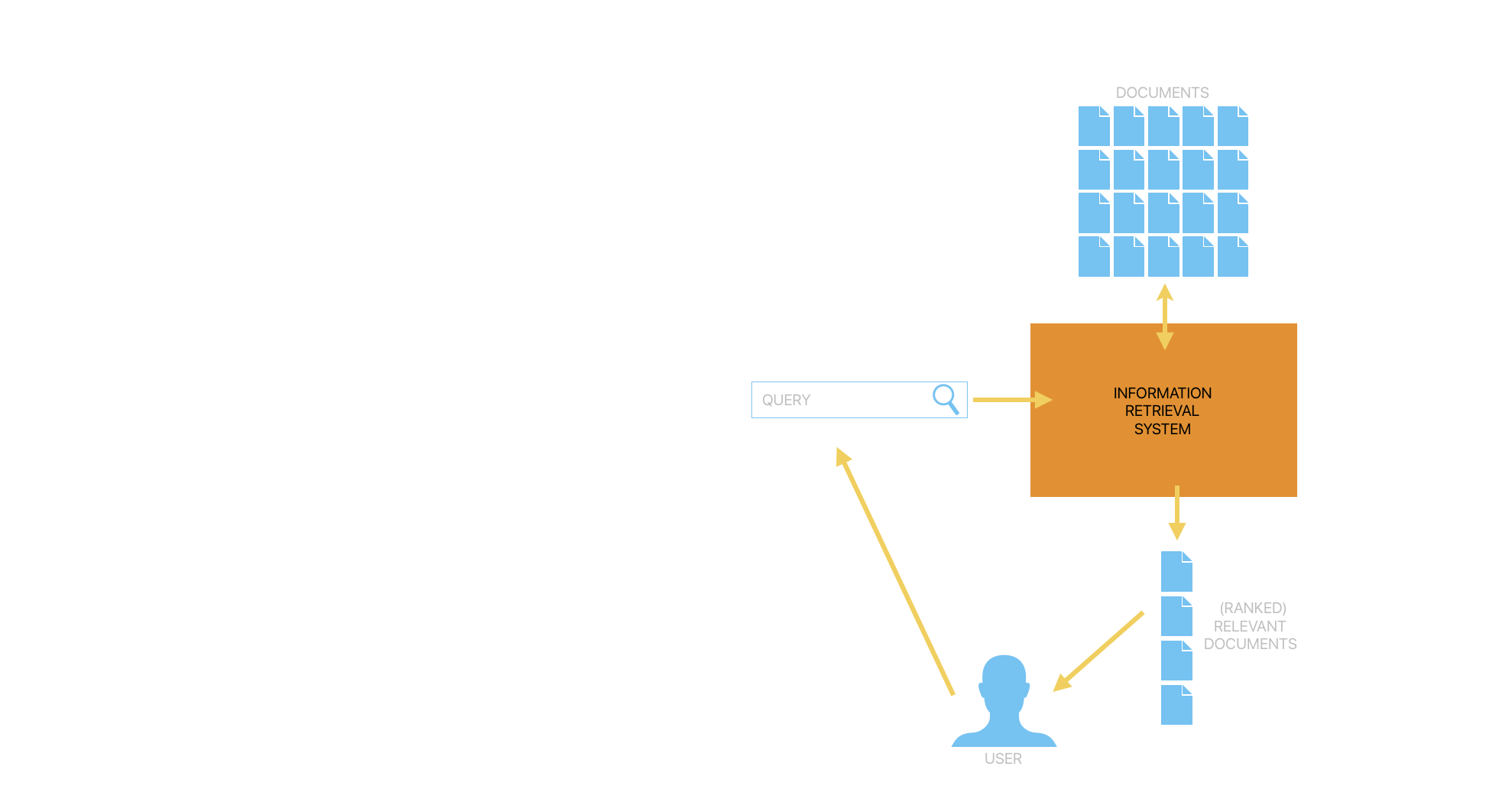

An information retrieval (IR) system is software which facilitates the organization, retrieval, and ranking or evaluation of relevant documents from a digital repository based on input from a user. People interact with IR systems constantly--to search for a song from a streaming service, find a restaurant in a new city, or find the receipt for an order in their email inbox. In a blog post about neural information retrieval, Meta AI Research said IR is "arguably among the most defining challenges of the information age".

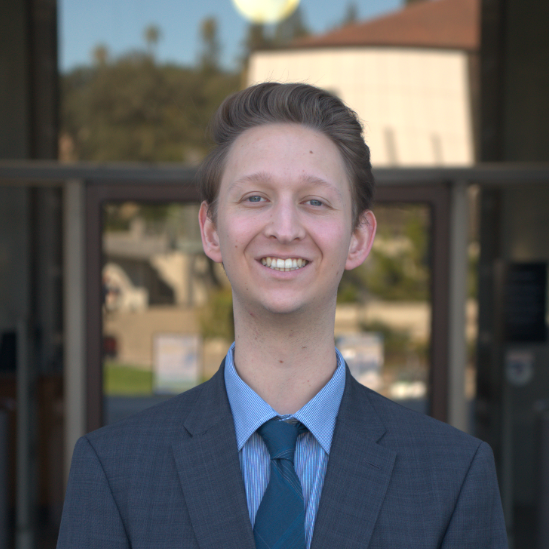

In recent years, there has been an explosion of development in using deep neural networks and deep learning methods for IR tasks. Instead of relying on heavily pre-defined, handcrafted features for query-document matching and ranking, neural network models can learn relationships between words and phrases, patterns, and hierarchical structure. However, using deep learning comes with a high computational cost, and require large amounts of training data before they can be deployed.

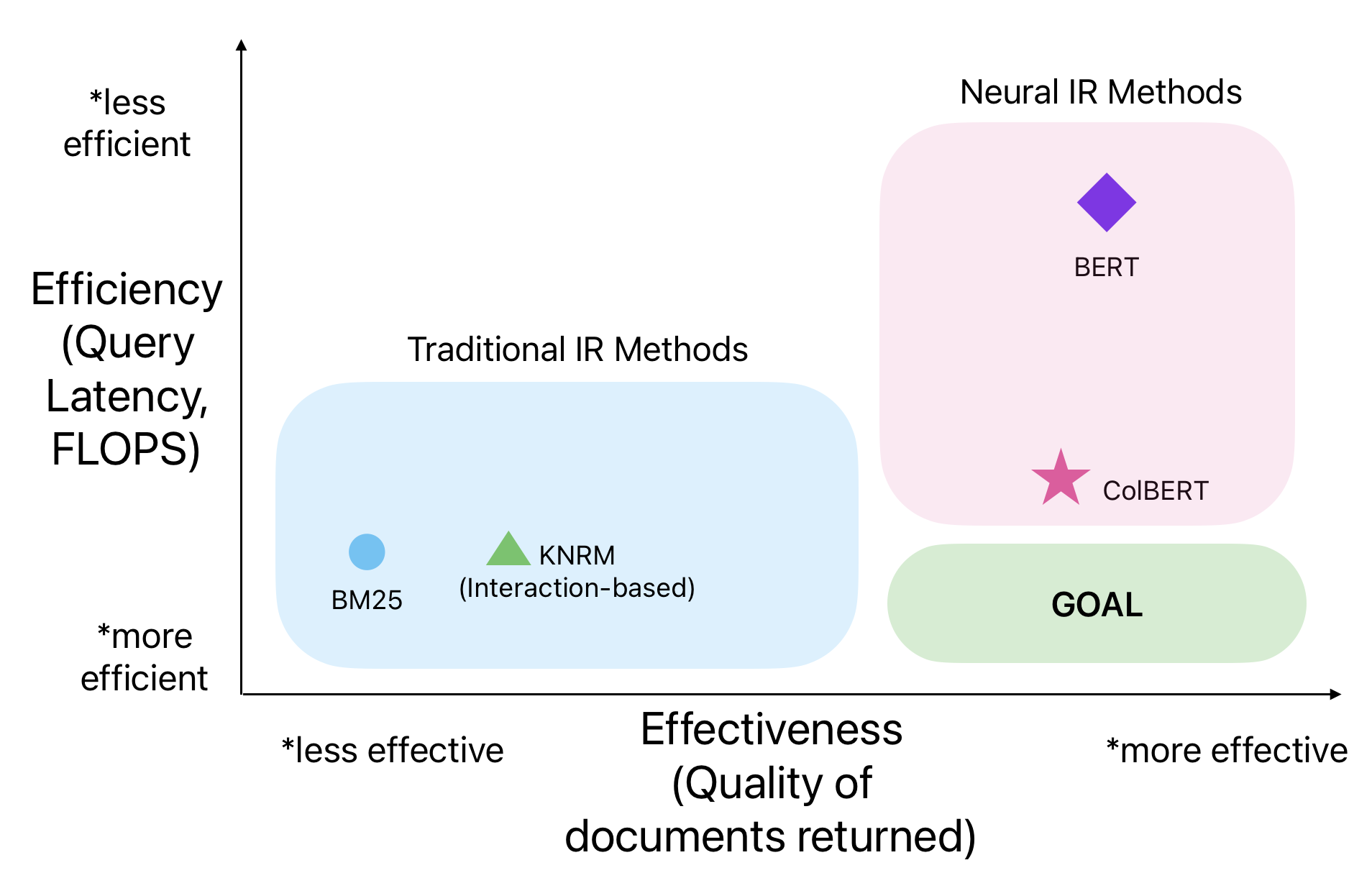

In this project, our task is to evaluate existing methods for IR, and attempt to narrow the efficiency-effectiveness gap. In other words, we want to develop a method which produces high-quality ranking results as efficiently as possible.

To accomplish this task, we evaluated several recently published neural information retrieval methods and made improvements upon them.

Efficiency:

Effectiveness:

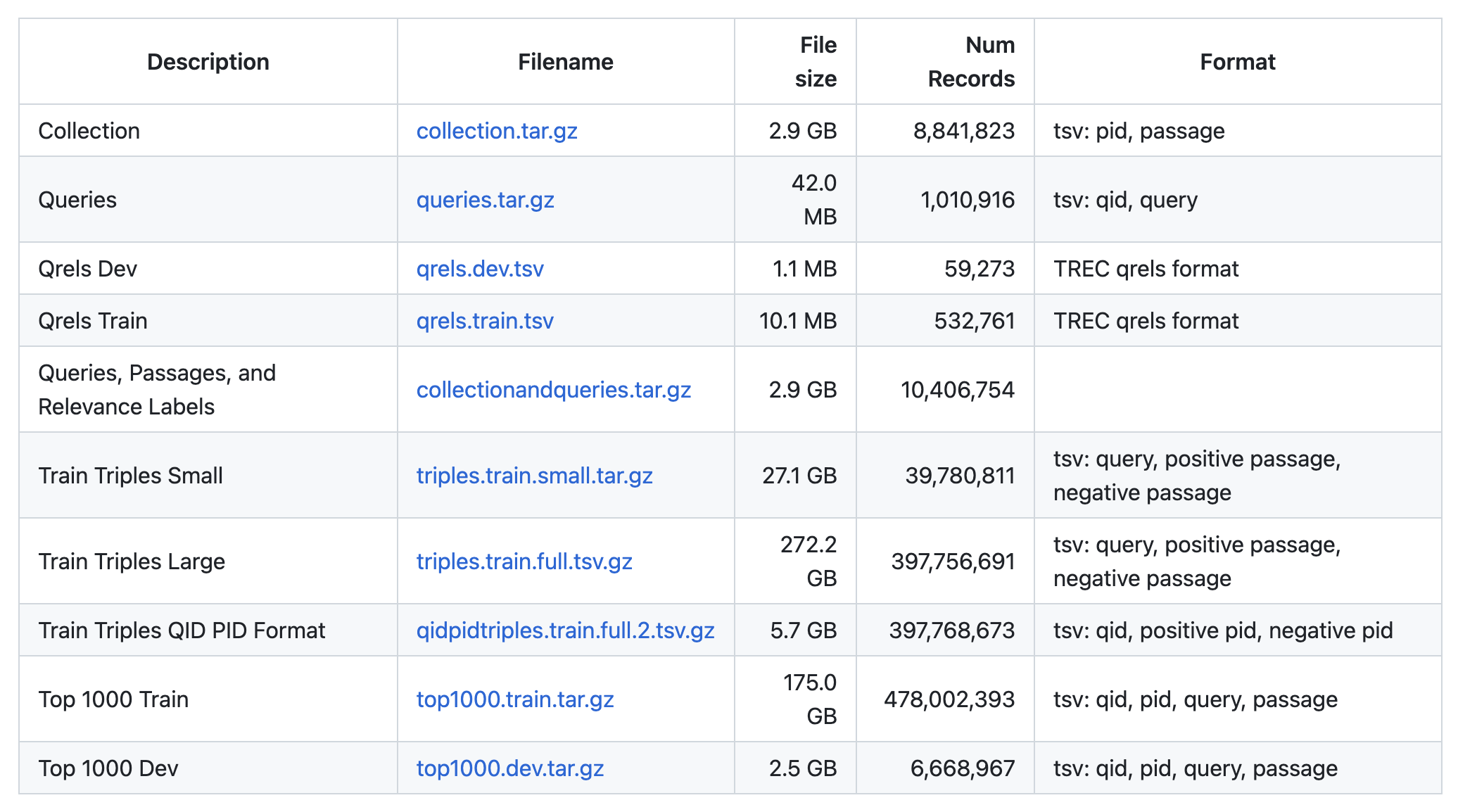

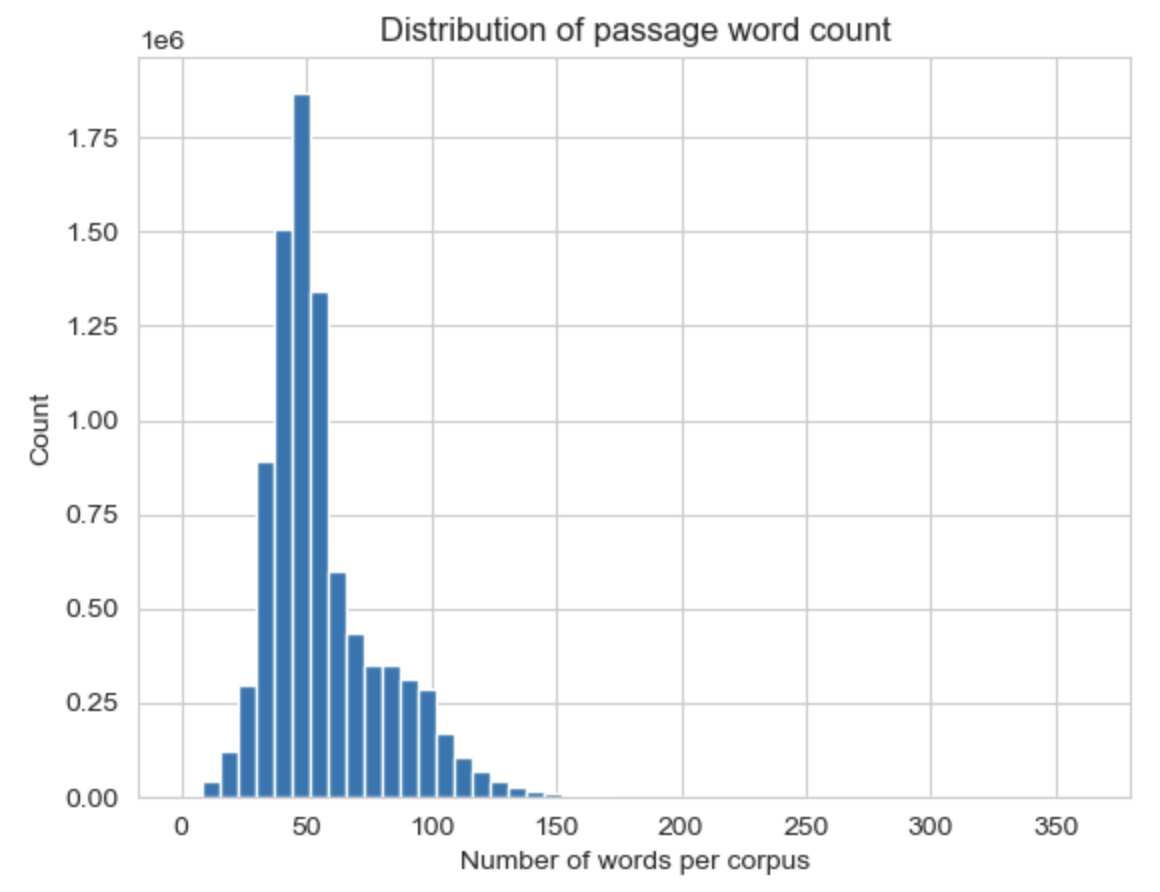

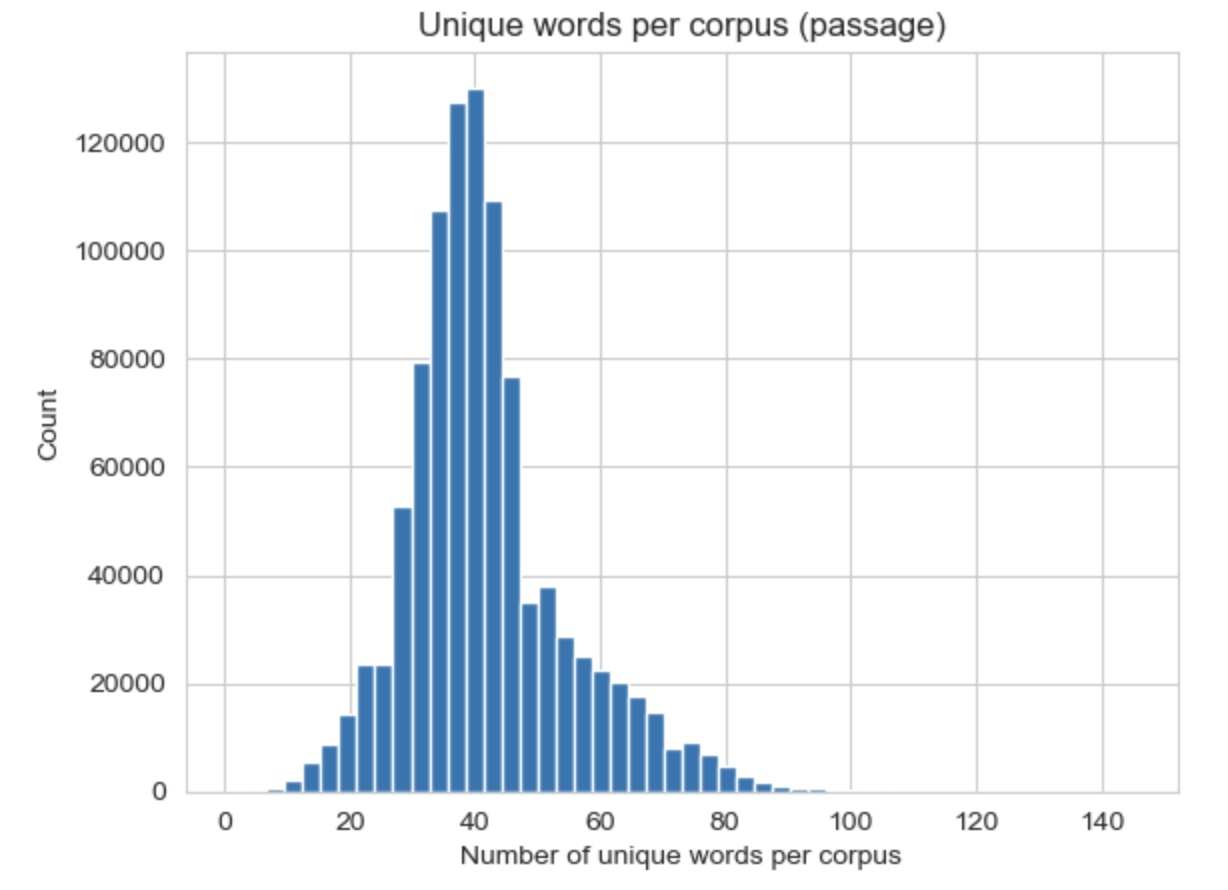

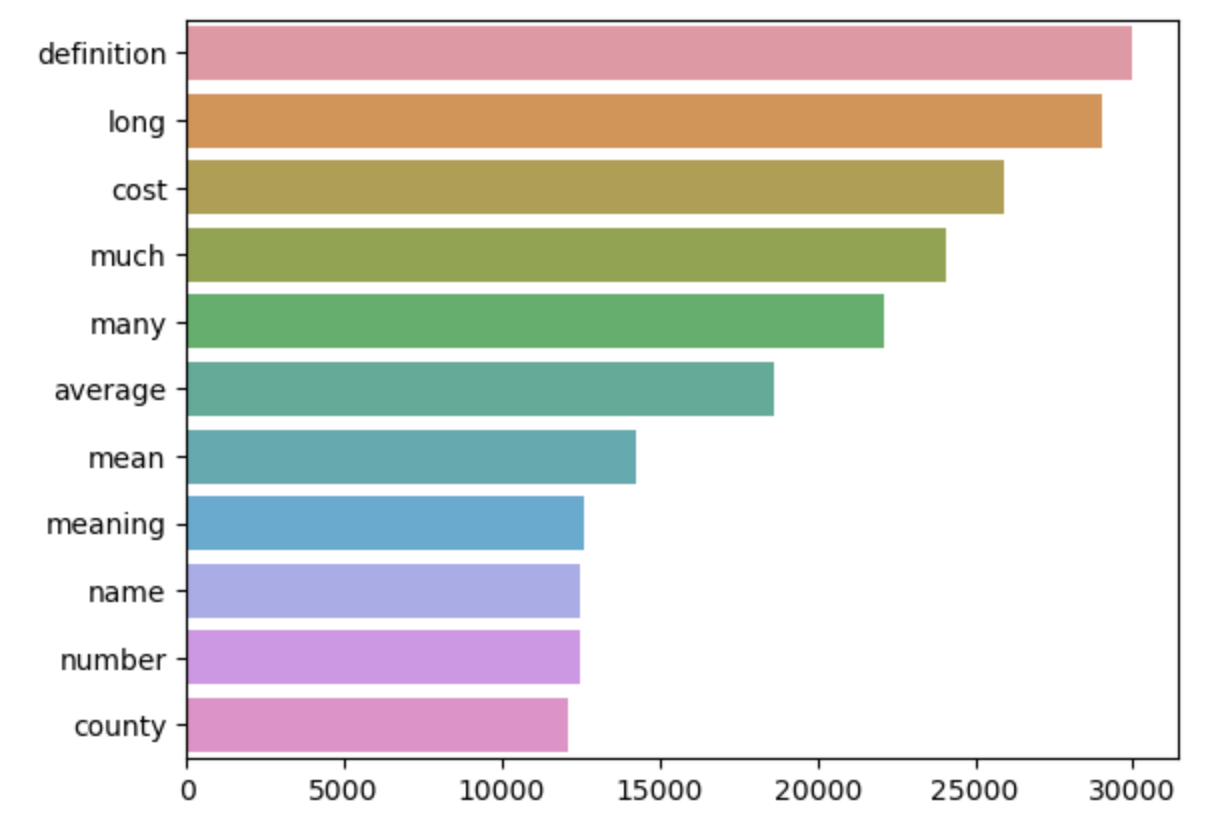

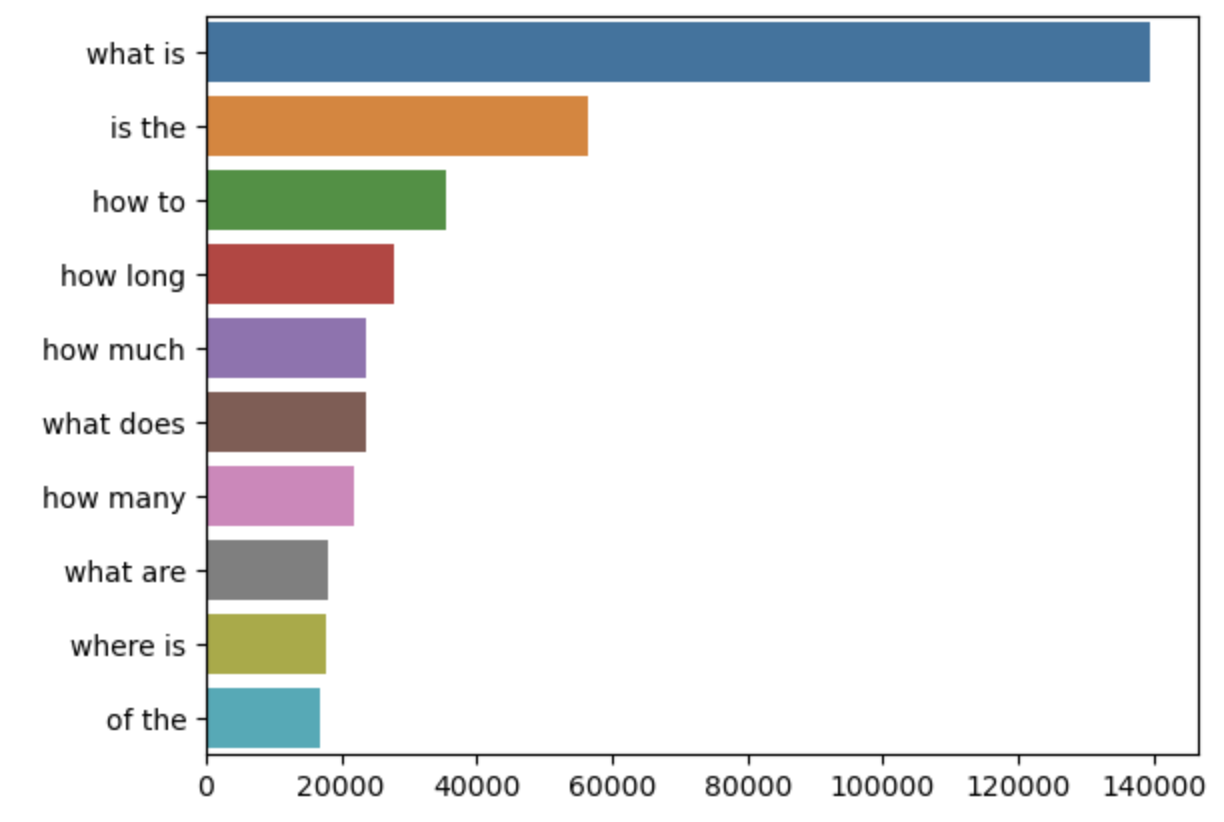

We used data from the MS MARCO collection of datasets provided by Microsoft for our project.

We use this dataset for several reasons...

Query: how many kiloliters are in a liter

Passage: How many kilojoules should an 18 year old girl have each day? You should be safe with around 8000-9000 kilojoules per day but it is highly relative to you personally and factors such as height, activity, age, gender and weight are inclus … ive in calculating how many kilojoules you should have.

Query: who were the maccabees

Passage 1: For other uses, see Maccabees (disambiguation). The Maccabees, also spelled Machabees (Hebrew: מכבים or מקבים, Maqabim; Latin: Machabaei or Maccabaei; Greek: μακκαβαῖοι, Makkabaioi), were the leaders of a Jewish rebel army that took control of Judea, which at the time had been a province of the Seleucid Empire.

Passage 2: The descendants of Mattathias. The Maccabees, also spelled Machabees (Hebrew: מכבים or מקבים, Maqabim; Latin: Machabaei or Maccabaei; Greek: μακκαβαῖοι, Makkabaioi), were the leaders of a Jewish rebel army that took control of Judea, which at the time had been a province of the Seleucid Empire.

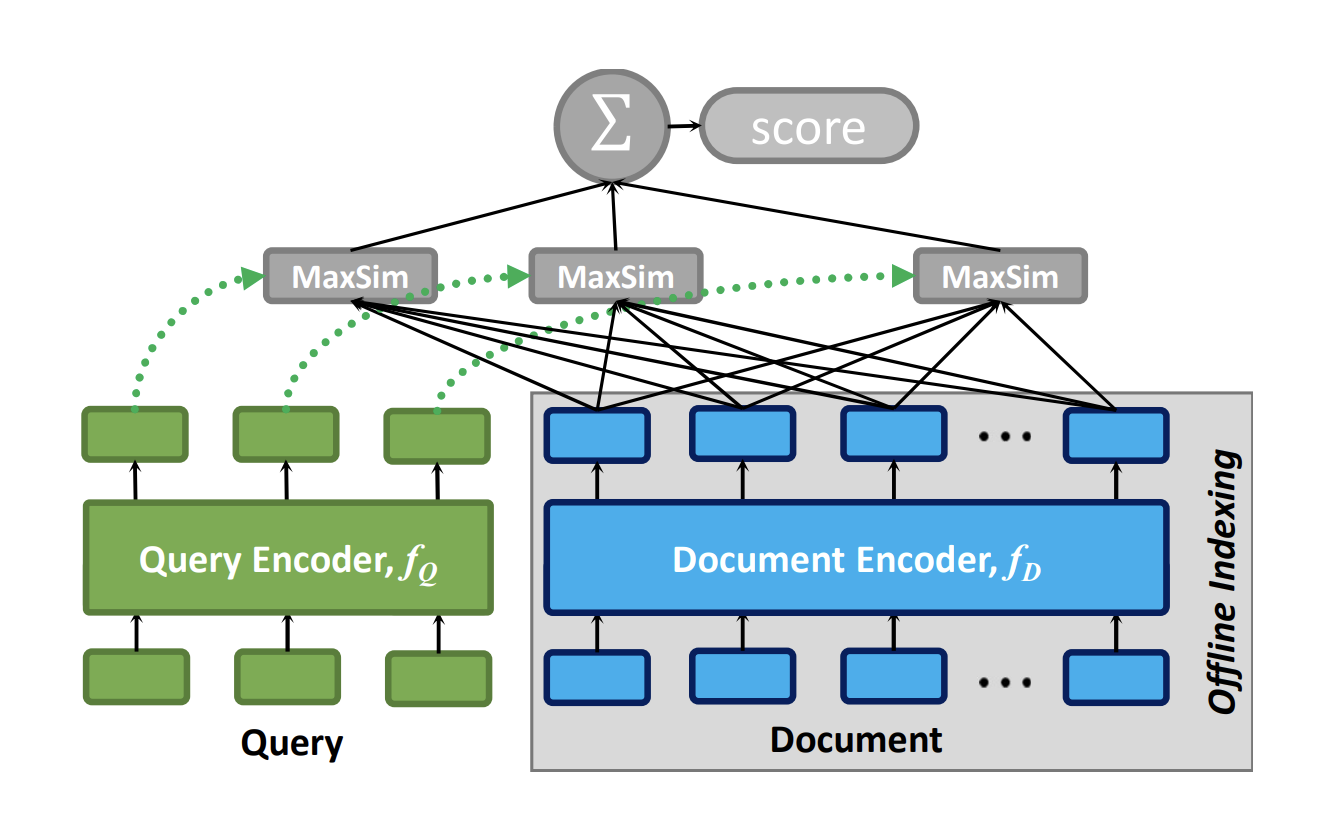

ColBERT is a ranking model which uses "contextualized late interation" with BERT. This method that leverages the effectiveness of deep learning models (specifically BERT) but is more efficient because it decreases the computational demand at the time of query processing using an architecture they call late interaction architecture.

BERT, which was at one point the state-of-the-art neural information retrieval method as it offered more effective results than existing methods, uses "all-to-all interaction", where a neural network is fed inputs that reflect the similarity between each word and/or phrase in the query and documents. In order to speed up query processing, ColBERT encodes the query and document using BERT and then performs an interaction step where the similarity is computed. By delaying the interaction between queries and documents, document representation can be pre-computed offline. This makes the system much more efficient, but still offers the effectiveness of contextualized term embedding.

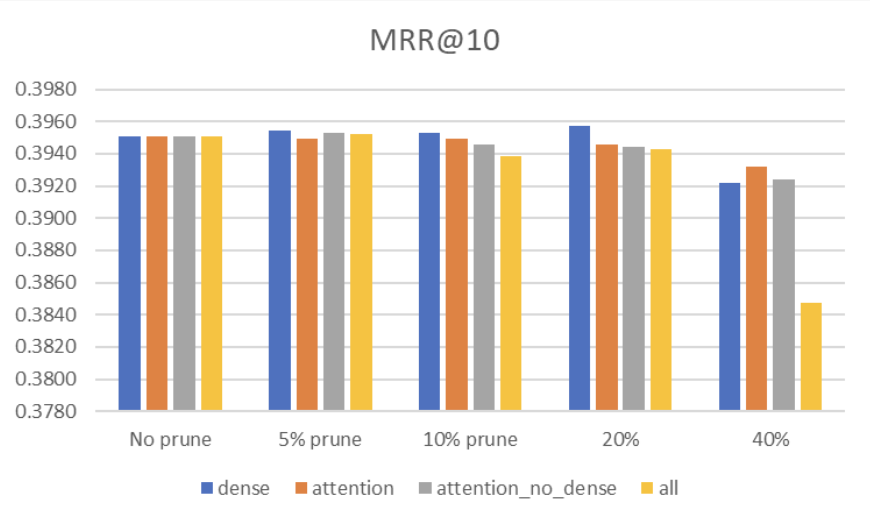

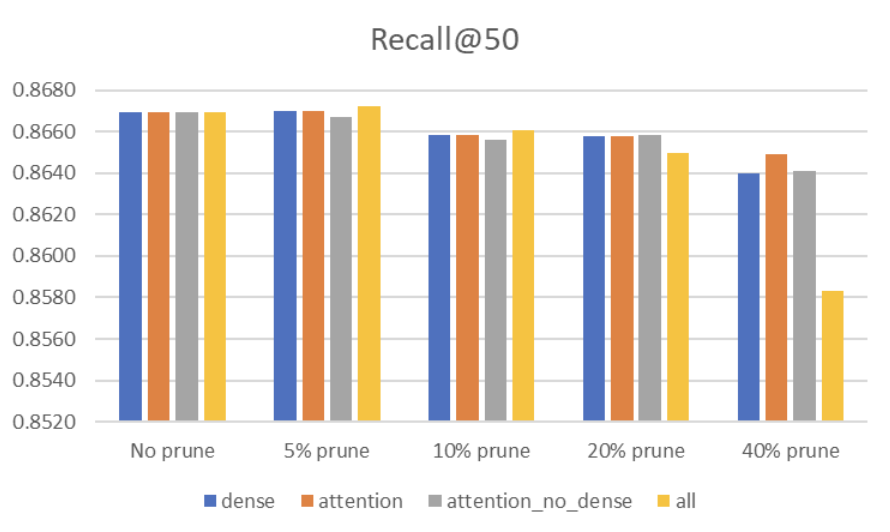

We attempted to improve upon ColBERT by creating pruning methods against ColBERT model layers.

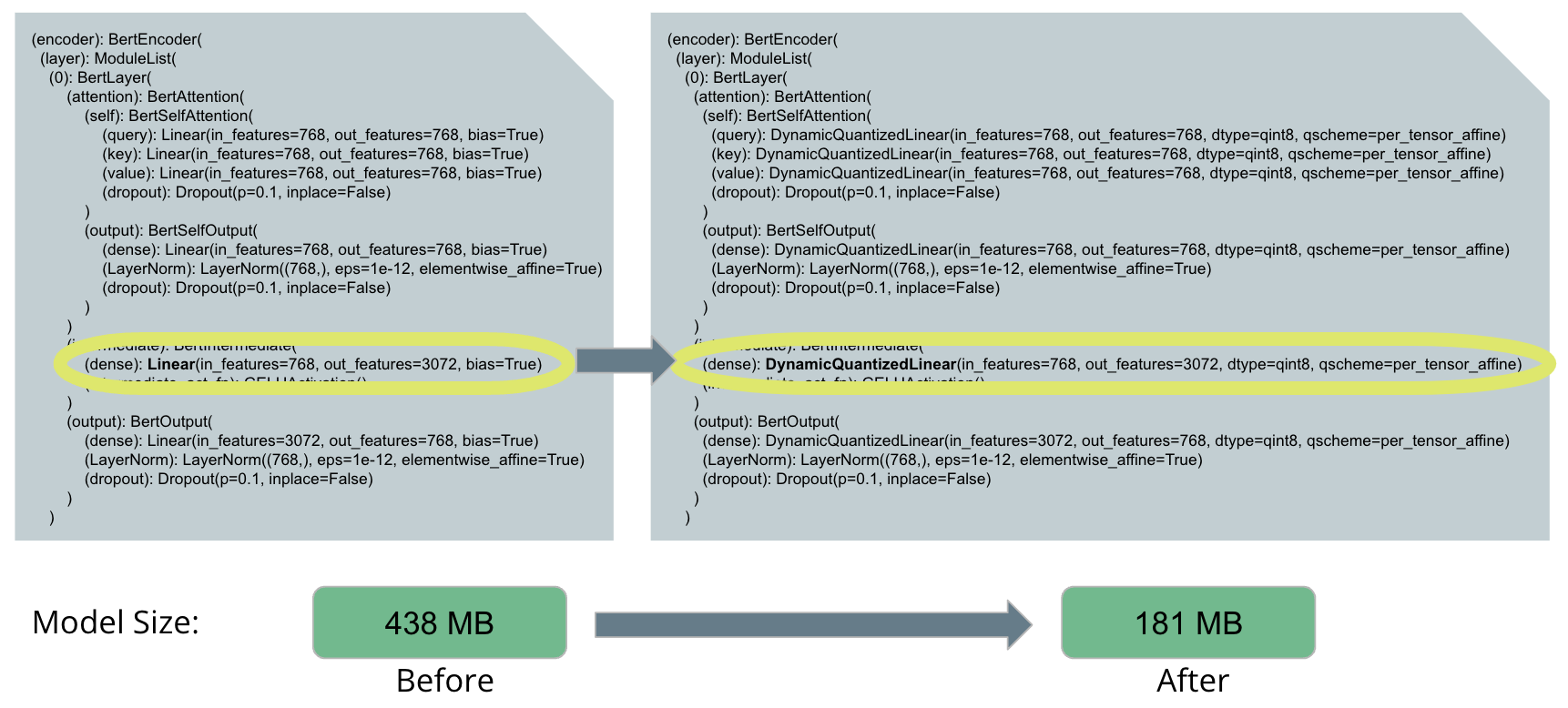

One of the challenges of using neural networks for information retrieval is the high computational demand of training neural networks. We used a quantization technique to reduce the model size and therefore reduce the hardware demands of the model.

Quantization of a model is the application of techniques for performing computations and storing tensors at lower bit-widths than floating point precision (i.e. using integers or smaller floating point values). This dramatically reduces both the memory requirement and computational cost of using neural networks. We applied dynamic quantization was post training and indexing.

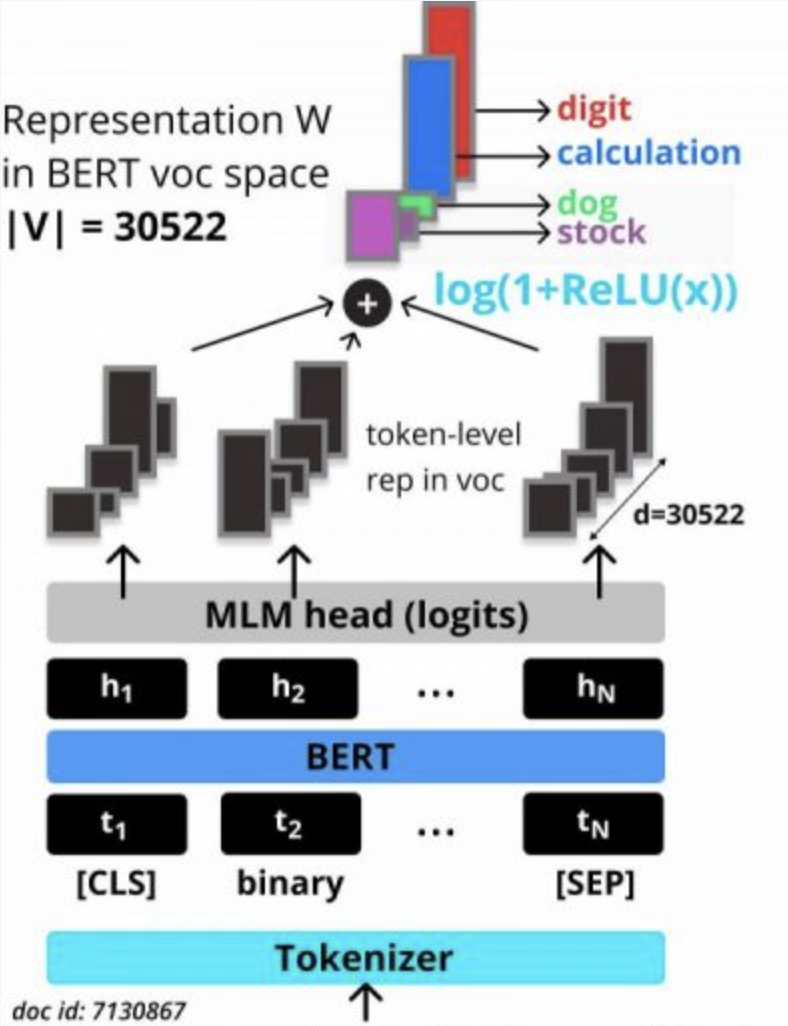

SPLADE is a neural IR method which uses sparse representations of queries and documents. Utilizing sparse vectors means SPLADE still has the desirable properties of Bag-of-Words based methods (traditional methods), like interpretability, exact term matching, and efficient use of inverted indexes. Below is an example of the SPLADE representation of a document.

| Query | Document | SPLADE BoW Representation |

|---|---|---|

| What is a corporation? | A corporation is a company or group of people authorized to act as a single entity and recognized as such in law. | [('corporation', 1.91), ('company', 1.29), ('single', 1.28), ('authorized', 1.21), ('entity', 1.01), ('group', 0.89), ('law', 0.82), ('recognized', 0.82), ('act', 0.8), ('a', 0.68), ('people', 0.44), ('as', 0.22)] |

The architecture of SPLADE is illustrated in the figure on the right. A document/query is tokenized and then input to BERT. The output embeddings are then projected back to the full vocabulary. Then the signals are summed and a log-saturation effect is applied to term weights. This process results in a single representation is that is used to compute the document-query score. SPLADE is able to perform information retrieval more efficiently than many neural IR methods while maintaining similar effectiveness (MRR and recall).

Our approach was to token-level transformer (BERT) with a sentence transformer (SBERT). This approach had a couple effects on the SPLADE model approach. First of all, the interpretability advantage of SPLADE was diminished because after using a sentence-level transformer we are no longer able to generate a token-level probability distribution to interpret why a document was included in the sparse index. Secondly, we hoped that the steps after the PLM would be more efficient since the model would not need to generate the probability distribution for each token (go through the hierarchical softmax and Concatenation step) and can instead it just produce the mean embeddings. However, this assumption turned out to be incorrect.

| Dataset Size | Model | MRR@10 | Recall@50 | Recall@200 | Recall@1000 | Avg Encoding Time per Query (in ms) |

|---|---|---|---|---|---|---|

| Toy dataset | ColBERT | 0.395149 | 0.866917 | 0.945977 | 0.976862 | 6.59 ms |

| Toy dataset | ColBERT Quantized | 0.387623 | 0.860005 | 0.942574 | 0.974558 | 5.17 ms |

| -0.01905 | -0.00797 | -0.0036 | -0.00236 | 1.27x faster | ||

| Full dataset | ColBERT | 0.397918 | 0.865142 | 0.945777 | 0.976369 | 6.56 ms |

| Full dataset | ColBERT Quantized | 0.388929 | 0.858006 | 0.941289 | 0.97341 | 3.91 ms |

| -0.02259 | -0.00825 | -0.00475 | -0.00303 | ~1.7x faster |

| Model | MRR@10 | FLOPS (Toy Dataset | FLOPS (MS TREC 2019) | Model Size (MB) |

|---|---|---|---|---|

| SPLADE with Sentence Transformer | 0.33 | 25,627 | 27,688 | 940 |

| SPLADE with Token-Level Transformer | 0.33 | 6,458 | 1,070 | 767 |

| Percent Change | 0% | -74% | -96% | -18% |